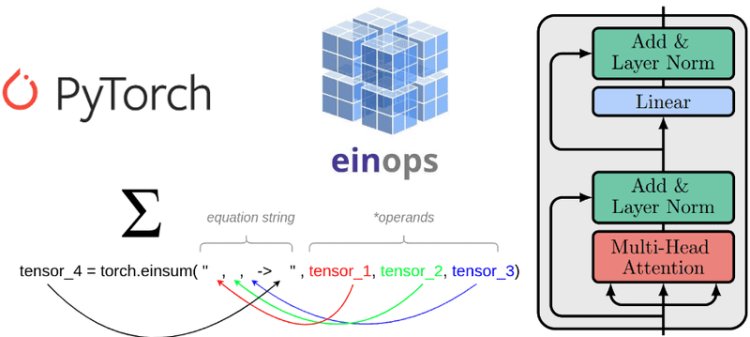

Understanding einsum for Deep learning: implement a transformer with multi-head self-attention from scratch

Learn about the einsum notation and einops by coding a custom multi-head self-attention unit and a transformer block

What's Your Reaction?

![[Computex] The new be quiet cooling!](https://technetspot.com/uploads/images/202406/image_100x75_6664d1b926e0f.jpg)